A graphical representation of the VOT of voiced, tenuis, and aspirated stops

Legacy: Decades of Discovery - 1960s

Katherine S. Harris and her collaborators Franklin Cooper and Peter MacNeilage were the first researchers in the U.S. to use electromyographic techniques, pioneered at the University of Tokyo's Research Institute of Logopedics and Phoniatrics (RILP), to study the neuromuscular organization of speech. They discovered that relations between muscle actions and phonetic segments are no simpler or more transparent than relations between acoustic signals and phonetic segments.

Leigh Lisker and Arthur Abramson looked for simplification at the level of articulatory action in the voicing of certain contrasting consonants (/b/, /d/, /g/ vs. /p/, /t/, /k/). They showed by acoustic measurements in eleven languages and by cross-language perceptual studies with synthetic speech that many acoustic properties of voicing contrasts arise from variations in voice onset time (VOT), that is, in the relative timing of the onset of vocal cord vibration and the release of a stop consonant. Their work was widely replicated and elaborated, in the U.S. and abroad, over the following decades.

Donald Shankweiler and Michael Studdert-Kennedy introduced dichotic listening into speech research, presenting different nonsense syllables simultaneously to opposite ears. They demonstrated dissociation of phonetic (speech) and auditory (nonspeech) perception by finding that phonetic structure devoid of meaning is an integral part of language, typically processed in the left cerebral hemisphere. Their work was replicated and developed in many laboratories over the following years.

Alvin Liberman, Cooper, Shankweiler, and Studdert-Kennedy summarized and interpreted fifteen years of research in “Perception of the Speech Code,” still among the most cited papers in the speech literature. It set the agenda for many years of research at Haskins and elsewhere by describing speech as a code in which speakers overlap (or coarticulate) segments to form syllables. These units last long enough to be resolved by the ear of a listener, who recovers segments from syllables by means of a specialized decoder in the brain’s left hemisphere that is formed from overlapping input and output neural networks: a physiologically grounded “motor theory.”

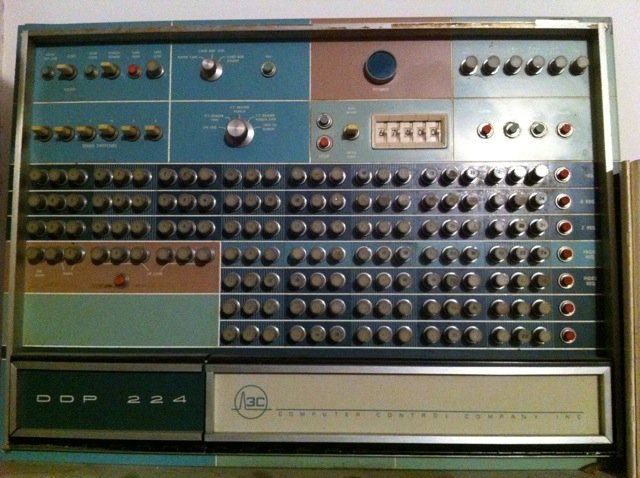

Haskins acquired its first computer (a Honeywell DDP224) and connected it to a speech synthesizer designed and built by the Laboratories’ engineers. Ignatius Mattingly, with British collaborators John N. Holmes and J. N. Shearme, adapted the Pattern Playback rules to write the first computer program for synthesizing continuous speech from a phonetically spelled input. A further step toward a reading machine for the blind combined Mattingly’s program with an automatic look-up procedure for converting alphabetic text into strings of phonetic symbols.
